In software development, smooth application delivery and scalability are paramount. DevOps aims to bridge the gap between development and operations teams, but even within a DevOps practice, deploying without disruption and absorbing unexpected traffic surges remain hard problems.

Enter the hero of this story: the load balancer. This nifty piece of technology plays a crucial role in creating a robust and efficient DevOps environment. Let’s delve into how load balancers work their magic and why they’re essential for a well-oiled DevOps workflow.

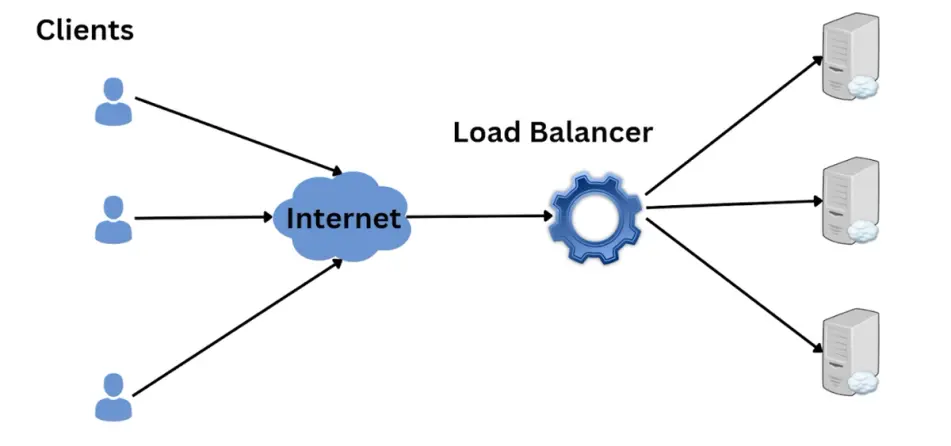

Understanding Load Balancers: The Traffic Cop

A load balancer acts like a traffic cop: it sits in front of your application servers and directs incoming traffic to them. When a user requests your website or application, the load balancer receives that request first. It then forwards the request to one of several servers in your infrastructure, spreading the load so that no single server is overwhelmed.

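This distribution can be based on factors such as server health, current load, server capacity, or even the type of request. Common policies include round robin, which rotates through the servers in order, and least connections, which favors the server handling the fewest active connections. The goal is to optimize performance and keep the user experience seamless.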
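As a rough illustration of how a distribution policy chooses a server, here is a minimal Python sketch of two common strategies: round robin (rotate through the servers in order) and least connections (pick the server with the fewest active connections). The `Backend` class and the `app-1` style names are hypothetical and exist only for this example. Real load balancers implement these policies internally.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Backend:
    # Hypothetical record for one application server behind the balancer.
    name: str
    active_connections: int = 0


class RoundRobin:
    """Hand out backends in a fixed rotation."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(backends)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Pick the backend currently serving the fewest connections."""

    def __init__(self, backends):
        self._backends = list(backends)

    def pick(self):
        return min(self._backends, key=lambda b: b.active_connections)


backends = [Backend("app-1"), Backend("app-2"), Backend("app-3")]

# Four requests under round robin: the rotation wraps after the third server.
rr = RoundRobin(backends)
rr_order = [rr.pick().name for _ in range(4)]

# Simulate uneven load, then ask the least-connections policy for a server.
backends[0].active_connections = 5
backends[1].active_connections = 2
backends[2].active_connections = 7
lc_choice = LeastConnections(backends).pick().name
```

Round robin is simple and works well when requests cost about the same. Least connections tends to cope better when some requests take much longer than others.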

Benefits of Load Balancers in DevOps

Now that we understand the basic functionality of load balancers, let’s explore how they specifically enhance the DevOps environment:

- Automated Deployments: Imagine deploying a new version of your application. Without a load balancer, taking a server offline to update it means dropped requests or a maintenance window. With a load balancer, you can automate a rolling deployment: the load balancer stops sending traffic to a server while it is updated, the remaining servers keep serving users, and the updated server rejoins the pool once it is ready. This minimizes downtime and keeps the rollout smooth.

- Scalability Made Easy: As your application grows in popularity, traffic surges become a real concern, and manually rerouting users to new servers is cumbersome. With a load balancer, scaling out is much simpler: you add servers to the pool, and once they are registered (manually, through service discovery, or via a cloud auto-scaling group), the load balancer starts sending them a share of the traffic.

- High Availability: Imagine one of your application servers crashes. Without a load balancer, users routed to that server see errors, and if it is your only server, the entire application goes down. Because a load balancer is the single point of entry for all traffic, it can detect the failure and redirect requests to the remaining healthy servers, so your application stays available. The load balancer itself should also be deployed redundantly so that it does not become a single point of failure.

- Improved Resource Utilization: Because every request passes through it, a load balancer can expose metrics such as request rates, response times, and per-server connection counts. This data helps DevOps teams identify bottlenecks and right-size their infrastructure. Using resources more efficiently can reduce costs while maintaining application performance.

- Simplified Disaster Recovery: Load balancers can be configured to work across multiple data centers or cloud environments. If one location suffers an outage, the load balancer can route traffic to the backup location, minimizing downtime. Protecting against data loss still depends on replicating data between the locations.
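To make the Automated Deployments point concrete, here is a minimal Python sketch of a rolling deployment, assuming the pool is just an in-memory dictionary. The `rolling_deploy` function, the `in_rotation` flag, and the server names are hypothetical. A real rollout would call your load balancer's API to drain each server and would wait for health checks before re-enabling it.

```python
def rolling_deploy(pool, new_version):
    """Update servers one at a time, keeping the rest in rotation.

    `pool` maps a server name to {"version": str, "in_rotation": bool}.
    Returns the smallest number of servers that were serving traffic
    at any point during the rollout.
    """
    min_serving = len(pool)
    for server in pool.values():
        # Drain: the load balancer stops sending new requests here.
        server["in_rotation"] = False
        serving = sum(s["in_rotation"] for s in pool.values())
        min_serving = min(min_serving, serving)

        # Stand-in for the real update step (install, restart, smoke test).
        server["version"] = new_version

        # Re-enable the server once it is updated.
        server["in_rotation"] = True
    return min_serving


pool = {
    "app-1": {"version": "1.0", "in_rotation": True},
    "app-2": {"version": "1.0", "in_rotation": True},
    "app-3": {"version": "1.0", "in_rotation": True},
}
min_serving = rolling_deploy(pool, "1.1")
```

With three servers, two of them stay in rotation at every step, which is why users see no outage as long as the remaining servers can carry the load.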

Different Types of Load Balancers

There are two main types of load balancers commonly used in DevOps environments:

- Layer 4 Load Balancers: These operate at the transport layer (Layer 4) of the OSI model. They distribute traffic based on connection-level information such as IP addresses and TCP/UDP ports, without inspecting the content of requests. This makes Layer 4 load balancers efficient and well suited to high-volume traffic scenarios.

- Layer 7 Load Balancers: These operate at the application layer (Layer 7) of the OSI model. Because they can read the request itself, they can make more intelligent decisions based on factors like URLs, content type, and even user cookies. Layer 7 load balancers offer more granular control but carry somewhat higher processing overhead, since each request must be parsed.

The choice between these types depends on your specific application requirements and traffic patterns.
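The difference between the two types comes down to what information the balancer looks at. The Python sketch below is only an illustration under simplifying assumptions: the Layer 4 function sees nothing but the client's address and port, while the Layer 7 function inspects the request path. The function names, pool names, and route table are hypothetical.

```python
import hashlib


def pick_l4(src_ip, src_port, backends):
    """Layer 4 style: choose using connection-level data only."""
    key = f"{src_ip}:{src_port}".encode()
    digest = hashlib.sha256(key).digest()
    # Map the hash onto the backend list. The same connection data
    # always lands on the same backend.
    index = int.from_bytes(digest[:4], "big") % len(backends)
    return backends[index]


def pick_l7(path, routes, default_pool):
    """Layer 7 style: inspect the request path; the longest prefix wins."""
    for prefix in sorted(routes, key=len, reverse=True):
        if path.startswith(prefix):
            return routes[prefix]
    return default_pool


backends = ["app-1", "app-2", "app-3"]
l4_choice = pick_l4("203.0.113.7", 51514, backends)

routes = {
    "/api/": "api-pool",
    "/api/admin/": "admin-pool",
    "/static/": "static-pool",
}
admin_target = pick_l7("/api/admin/users", routes, "web-pool")
api_target = pick_l7("/api/orders", routes, "web-pool")
fallback_target = pick_l7("/index.html", routes, "web-pool")
```

The Layer 4 decision never needs to parse a request, which keeps it cheap. The Layer 7 decision can send `/api/` and `/static/` traffic to different pools, at the cost of reading each request.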

Integrating Load Balancers into Your DevOps Workflow

Here are some key considerations for seamlessly integrating load balancers into your DevOps workflow:

- Infrastructure as Code (IaC): Utilize IaC tools like Terraform or Ansible to automate the provisioning and configuration of your load balancer along with your application servers. This ensures consistency and reduces manual configuration errors.

- Monitoring and Alerting: Set up monitoring tools to track the performance of your load balancer and application servers. Implement alerts to notify your team in case of any issues like server failures or performance bottlenecks.

- CI/CD Pipeline Integration: Integrate your load balancer configuration into your CI/CD pipeline. This allows you to automatically configure and update your load balancer as part of your application deployment process.
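One simple way to treat load balancer configuration as code is to generate it from the same server inventory your pipeline already knows about. The Python sketch below renders an NGINX-style `upstream` block from a list of hosts. The `render_upstream` helper, the upstream name, and the addresses are assumptions made for illustration. A real pipeline step would also write the file, validate it, and reload the load balancer.

```python
def render_upstream(name, servers, port=8080):
    """Render an NGINX-style upstream block for the given hosts."""
    if not servers:
        raise ValueError("at least one server is required")
    lines = [f"upstream {name} {{"]
    for host in servers:
        lines.append(f"    server {host}:{port};")
    lines.append("}")
    return "\n".join(lines) + "\n"


# Hypothetical inventory, e.g. produced by an earlier provisioning step.
inventory = ["10.0.0.11", "10.0.0.12"]
config_text = render_upstream("app_servers", inventory)
print(config_text)
```

Because the configuration is derived from the inventory rather than edited by hand, adding a server becomes a reviewed change to one list instead of a manual edit on the load balancer.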

By leveraging load balancers, DevOps teams can achieve automated deployments, effortless scaling, high availability, and optimized resource utilization. This translates to smoother application delivery, a more resilient infrastructure, and ultimately, a happier user experience.

Beyond the Basics: Advanced Load Balancing Techniques

While we’ve covered the core functionalities of load balancers, there’s more to explore for those seeking to optimize their DevOps environment further:

- Health Checks: Configure your load balancer to perform regular health checks on your application servers. This ensures that only healthy servers receive traffic, preventing users from encountering errors due to malfunctioning servers.

- Session Persistence: Some applications keep session state on the server, so a user must keep talking to the same server throughout their interaction. Load balancers offer session persistence (also called sticky sessions), which routes all requests from a given user to the same server for the duration of the session, even while requests from other users are spread across the pool.

- SSL/TLS Termination: Load balancers can handle SSL/TLS encryption and decryption, offloading this task from your application servers and improving overall performance.

- Advanced Routing Techniques: Modern load balancers offer advanced routing techniques like content-based routing or path-based routing, giving you more control over how traffic is distributed.
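Health checks and session persistence interact: a sticky session should follow its server only while that server is healthy. The Python sketch below illustrates one possible approach, assuming a server is marked down after a number of consecutive failed checks and marked up again after a number of consecutive successes. The class names and the `fall` and `rise` parameters are hypothetical, and the check results are supplied by hand rather than by real probes.

```python
class HealthTracker:
    """Track backend health from a stream of check results."""

    def __init__(self, backends, fall=3, rise=2):
        self.fall = fall  # consecutive failures before marking down
        self.rise = rise  # consecutive successes before marking up
        self.state = {b: {"healthy": True, "streak": 0} for b in backends}

    def record(self, backend, ok):
        s = self.state[backend]
        if ok == s["healthy"]:
            s["streak"] = 0  # result agrees with current state: reset
            return
        s["streak"] += 1
        needed = self.rise if ok else self.fall
        if s["streak"] >= needed:
            s["healthy"] = ok
            s["streak"] = 0

    def healthy(self):
        return [b for b, s in self.state.items() if s["healthy"]]


class StickyRouter:
    """Pin each session to one backend while that backend is healthy."""

    def __init__(self, tracker):
        self.tracker = tracker
        self.sessions = {}  # session id -> backend name
        self._next = 0

    def route(self, session_id):
        healthy = self.tracker.healthy()
        if not healthy:
            raise RuntimeError("no healthy backends")
        pinned = self.sessions.get(session_id)
        if pinned in healthy:
            return pinned
        # New session, or the pinned backend is down: pick another one.
        choice = healthy[self._next % len(healthy)]
        self._next += 1
        self.sessions[session_id] = choice
        return choice


tracker = HealthTracker(["app-1", "app-2"])
router = StickyRouter(tracker)

first = router.route("sess-a")
second = router.route("sess-a")  # same session, same backend

for _ in range(3):               # three failed checks in a row
    tracker.record(first, False)
after_failure = router.route("sess-a")
```

Requiring several consecutive results before changing state avoids flapping on a single slow response. Note that a session moved to a new server loses any state held only in the old server's memory, which is one reason many teams store session data in a shared store instead.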

By exploring these advanced features, DevOps teams can further enhance their infrastructure’s efficiency and user experience.

The Final Word: Embracing Load Balancers for a Robust DevOps Environment

Load balancers are an essential tool in the DevOps toolbox. Their ability to automate deployments, simplify scaling, and ensure high availability streamlines the application lifecycle management process. By integrating load balancers effectively, DevOps teams can achieve a more reliable and scalable infrastructure, ultimately delivering a superior user experience.

So, the next time you think about DevOps, remember the unsung hero – the load balancer. It plays a crucial role in ensuring your applications run smoothly and efficiently, allowing you to focus on what matters most – building and delivering exceptional software.